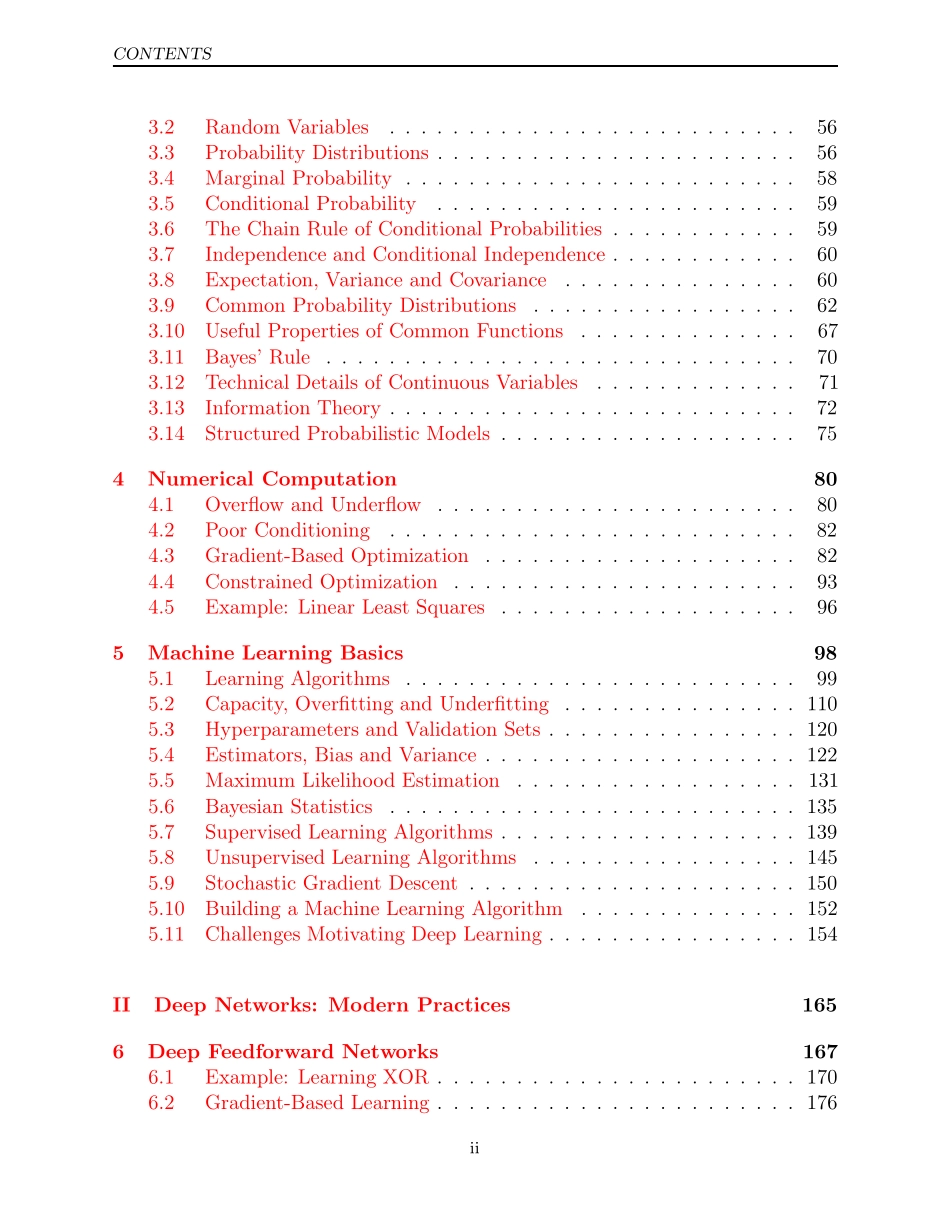

Deep Learning

Ian Goodfellow, Yoshua Bengio, Aaron Courville

Contents

- Website (p. vii)
- Acknowledgments (p. viii)
- Notation (p. xi)
- 1 Introduction (p. 1)
  - 1.1 Who Should Read This Book? (p. 8)
  - 1.2 Historical Trends in Deep Learning (p. 11)
- Part I: Applied Math and Machine Learning Basics (p. 29)
  - 2 Linear Algebra (p. 31)
    - 2.1 Scalars, Vectors, Matrices and Tensors (p. 31)
    - 2.2 Multiplying Matrices and Vectors (p. 34)
    - 2.3 Identity and Inverse Matrices (p. 36)
    - 2.4 Linear Dependence and Span (p. 37)
    - 2.5 Norms (p. 39)
    - 2.6 Special Kinds of Matrices and Vectors (p. 40)
    - 2.7 Eigendecomposition (p. 42)
    - 2.8 Singular Value Decomposition (p. 44)
    - 2.9 The Moore-Penrose Pseudoinverse (p. 45)
    - 2.10 The Trace Operator (p. 46)
    - 2.11 The Determinant (p. 47)
    - 2.12 Example: Principal Components Analysis (p. 48)
  - 3 Probability and Information Theory (p. 53)
    - 3.1 Why Probability? (p. 54)
    - 3.2 Random Variables (p. 56)
    - 3.3 Probability Distributions (p. 56)
    - 3.4 Marginal Probability (p. 58)
    - 3.5 Conditional Probability (p. 59)
    - 3.6 The Chain Rule of Conditional Probabilities (p. 59)
    - 3.7 Independence and Conditional Independence (p. 60)
    - 3.8 Expectation, Variance and Covariance (p. 60)
    - 3.9 Common Probability Distributions (p. 62)
    - 3.10 Useful Properties of Common Functions (p. 67)
    - 3.11 Bayes’ Rule (p. 70)
    - 3.12 Technical Details of Continuous Variables (p. 71)
    - 3.13 Information Theory (p. 72)
    - 3.14 Structured Probabilistic Models (p. 75)
  - 4 Numerical Computation (p. 80)
    - 4.1 Overflow and Underflow (p. 80)
    - 4.2 Poor Conditioning (p. 82)
    - 4.3 Gradient-Based Optimization (p. 82)
    - 4.4 Constrained Optimization (p. 93)
    - 4.5 Example: Linear Least Squares (p. 96)
  - 5 Machine Learning Basics (p. 98)
    - 5.1 Learning Algorithms (p. 99)
    - 5.2 Capacity, Overfitting and Under...